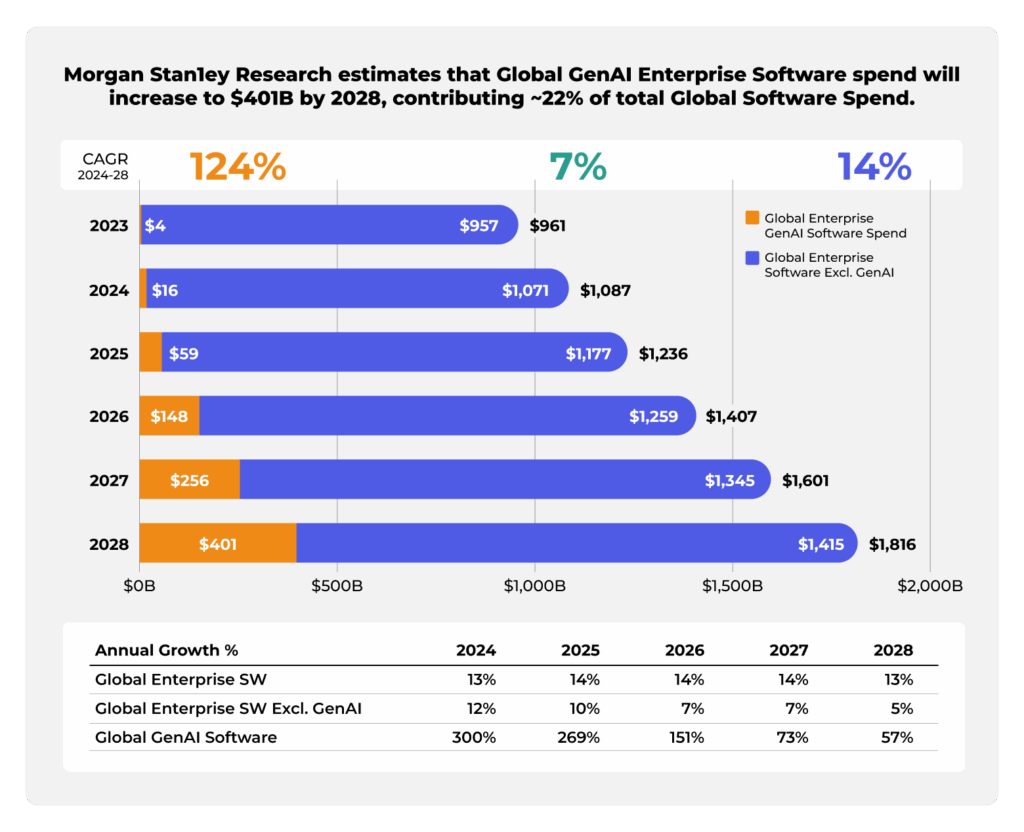

Enterprise adoption of generative AI (GenAI) is poised for explosive growth, fundamentally reshaping IT budgets.

GenAI has become the No. 1 technology discussed at the board level. Morgan Stanley Research forecasts a 20-fold surge in GenAI-driven software revenue over the next three years, with annual enterprise GenAI spend reaching roughly $400 billion by 2028 – about 22% of all software spending by that point. In other words, by 2028 more than one in five software dollars could be going to GenAI solutions.

This boom reflects soaring expectations: after GenAI’s breakout, investors and executives alike anticipate a new cycle of tech transformation and ROI. Indeed, early signs of ROI are emerging, with analysts noting that 2025 is on track to be the first year of positive ROI from GenAI initiatives.

Yet amid this “gold rush,” a reality check is needed: capturing that $400B opportunity won’t be easy.

Enterprise GenAI is not plug-and-play; early momentum has revealed serious growing pains in scaling from nifty demos to business-critical deployments. For all the hype, many organizations remain stuck in experimentation mode. A 2024 Gartner report found that only 48% of AI prototypes make it into production.

In other words, the GenAI boom risks a bust if enterprises can’t industrialize these initiatives. The biggest obstacles aren’t fancy model architectures or algorithms; they’re the unsexy basics of enterprise data and governance.

Why GenAI Initiatives Stall

The majority of AI projects never make it into production. Why not? Talk to any IT leader piloting GenAI, and the conversation quickly shifts from model magic to data drudgery. Organizations are finding that small proofs-of-concept built on sample data or third-party APIs crumble when confronted with the messy reality of enterprise data.

Data fragmentation is a top culprit. Valuable information is scattered across on-premises systems and clouds, stuck in silos that GenAI models struggle to reach. In a 2025 CIO survey, nearly all business leaders admitted to significant AI data woes – citing duplicate records and inefficient data integration as primary blockers to AI success. This fragmentation makes it hard to assemble the comprehensive, high-quality datasets that GenAI needs for training and accurate outputs.

Even when data can be wrangled from its diverse locations and sources, data quality issues can poison GenAI efforts. Many pilot projects uncover that their data is incomplete, inconsistent, or poorly labeled, a recipe for underwhelming model performance. Without robust data governance and cleaning, GenAI models simply generate garbage faster. As one CTO quipped, “AI ambition alone doesn’t translate to execution success without data readiness.”

Then there’s the hurdle of compliance and risk management. According to Harvard Business Review, this accounts for 29% of failed GenAI projects. Feeding enterprise data into cloud-based AI raises red flags around privacy, IP protection, and regulatory compliance. Many GenAI pilots run headlong into internal compliance bottlenecks, with lawyers and risk officers tapping the brakes due to fears of sensitive data leakage or unknown biases. Impatient executives want ROI but also worry about unchecked risks and costs. For regulated industries (finance, healthcare, government, etc.), these concerns are especially acute. Data privacy laws (like HIPAA in healthcare, GDPR in Europe) restrict data movement, and GenAI initiatives often trigger intensive reviews to ensure these regulations aren’t breached.

This helps explain why finance and legal functions show the slowest GenAI adoption – legal in particular, where 38% of teams haven’t even considered its use as of 2024.

It’s no surprise, then, that a wave of GenAI projects stalls in “POC purgatory”: impressive in demos but unable to overcome enterprise data hurdles to reach production. Fragmented data, untrustworthy data quality, and compliance roadblocks have become the trio of challenges suffocating GenAI initiatives in the cradle. The ambitious vision is there, but the foundation, a unified, governed, compliant data backbone, is often missing.

How do enterprises bridge this chasm between GenAI’s promise and the reality of their legacy data environments?

Hybrid GenAI Is the Way Forward

Increasingly, enterprises conclude that part of the answer is a hybrid cloud architecture, which is fast becoming not just an IT strategy but the de facto GenAI strategy.

Sid Nag, VP Analyst at Gartner, put it bluntly, predicting that 90% of organizations will adopt this approach through 2027. He stated: “The most urgent GenAI challenge to address over the next year will be data synchronization across the hybrid cloud environment.” In other words, virtually every company is heading down a path where the number one priority is synchronizing data across both on-premises and cloud realms.

Why choose this approach? Because it uniquely solves the thorniest problems hobbling GenAI efforts. A well-designed hybrid cloud (combining local infrastructure with private and public clouds working in tandem) lets enterprises deploy GenAI where it makes most sense for their data, performance needs, and risk profile. It leverages the cloud’s massive compute power without surrendering control over data or abdicating compliance responsibilities.

In essence, hybrid cloud offers a “best of both worlds” platform: keeping critical data and workloads in controlled environments while tapping cloud-scale resources when needed.

Let’s break down the specific advantages:

- Data Control & Sovereignty: A hybrid cloud approach gives enterprises control over where sensitive data lives and how it’s handled. Organizations can keep critical data on-premises or in private clouds to meet sovereignty requirements, such as keeping EU citizen data within borders, or to ensure no data leaves the firewall without oversight. For example, banks and defense firms often run LLMs in-house to keep customer data internal. A global bank might fine-tune Llama on private servers for proprietary research while using the public cloud for non-sensitive tasks. This approach avoids vendor lock-in and blind trust by letting companies choose what stays local. It also addresses fragmentation by connecting on-premises systems, private lakes, and public buckets to deliver a unified view without centralizing everything.

- Performance Optimization & Low Latency: GenAI workloads vary wildly in their compute needs. Training large models benefits from cloud-scale clusters, while real-time inference, like an AI assistant in an ER, demands low latency near the user. Hybrid architecture allows workload placement based on need: batch processes such as training and RAG document ingestion can burst to cloud GPU clusters, while inference runs on edge or local servers for instant response. This flexibility avoids cloud round-trips, reduces bandwidth costs, and ensures performance where it matters. Hybrid setups also help teams use cloud scale when needed, without paying cloud costs for every task. In short, compute moves to where the data or users are, not the other way around.

- Unified Governance & Security: Hybrid cloud enables centralized governance across environments. Instead of scattered shadow AI projects, enterprises can enforce consistent policies, access controls, and audit trails, whether data is local or in the cloud. Modern governance tools and data catalogs help maintain data quality and lineage across the stack. Security also benefits: teams get visibility across all assets and can apply zero-trust principles end to end. Identity systems can span both environments, encryption key management can be unified, and anomaly detection can cover the full hybrid network. This makes it possible to scale GenAI with confidence, without multiplying the risk of data leaks or compliance gaps.

- Regulatory Compliance & Data Privacy: Hybrid setups simplify compliance by letting organizations bring AI to the data, not the other way around. A healthcare provider, for example, can keep patient data in a HIPAA-compliant local system and run AI there. For general tasks, they can use cloud resources with anonymized data. This ensures that regulators know data isn’t being misused or moved improperly. The flexibility also helps with residency rules, e.g., using a government cloud region to stay within public sector standards. More broadly, hybrid models let teams tailor infrastructure to each dataset’s compliance profile, turning regulatory barriers into technical decisions about workload placement.

- Reduced Vendor Lock-In: Enterprises gain leverage by designing GenAI systems to operate across multiple environments – on-premises, private cloud, or any public cloud; they’re no longer boxed into a single provider’s ecosystem. Model training can happen on Azure this month and migrate to AWS next quarter, or inference can shift between local GPUs and Google Cloud depending on latency or cost. This flexibility reduces dependency on any one vendor’s APIs, pricing model, or uptime guarantees. It also enables portability of models, data pipelines, and orchestration layers, making it easier to adapt to evolving needs or negotiate better terms. In a rapidly shifting GenAI landscape, the ability to move fast without re-architecting everything is a major strategic advantage, and hybrid makes that possible.
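
The placement logic running through the list above (sensitive data stays in controlled environments, latency-critical inference runs near the user, batch work bursts to the cloud) can be sketched as a small policy function. This is a minimal illustration, not a reference implementation: the `Workload` fields, the `Site` names, and the 100 ms latency budget are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Site(Enum):
    ON_PREM = "on_prem"
    EDGE = "edge"
    PUBLIC_CLOUD = "public_cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str                              # "training", "ingestion", or "inference"
    sensitive_data: bool                   # e.g. regulated customer or patient records
    max_latency_ms: Optional[int] = None   # None means no real-time requirement


# Hypothetical threshold: at or below this budget, a cloud round-trip
# is assumed to be too slow for the user.
EDGE_LATENCY_BUDGET_MS = 100


def place(workload: Workload) -> Site:
    """Choose where a workload runs, checking compliance before performance."""
    # Compliance first: sensitive data stays in the controlled environment.
    if workload.sensitive_data:
        return Site.ON_PREM
    # Latency-critical inference runs close to the user.
    if (
        workload.kind == "inference"
        and workload.max_latency_ms is not None
        and workload.max_latency_ms <= EDGE_LATENCY_BUDGET_MS
    ):
        return Site.EDGE
    # Everything else (batch training, bulk ingestion) can burst to the cloud.
    return Site.PUBLIC_CLOUD
```

Checking sensitivity before latency is a deliberate ordering in this sketch: a workload that is both sensitive and latency-critical stays on-premises rather than moving to an edge site that may sit outside the compliance boundary. A real policy would likely draw on a data catalog and cost signals as well.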

In short, hybrid cloud architecture directly addresses the data control, performance, governance, lock-in, and compliance challenges that have plagued enterprise GenAI efforts. It creates a fabric where data can be synchronized and models can be deployed across environments seamlessly, which, as Gartner emphasized, is the critical challenge for the coming year. The endgame is to have GenAI pipelines that ingest data from anywhere, serve intelligence to users everywhere, and store outputs in the right place – all without violating trust, blowing the budget, or breaking compliance. This may sound ambitious, but it’s exactly the trajectory that leading enterprises and cloud providers are on.

From Data to Deployment: Hybrid at Every GenAI Stage

To truly grasp why hybrid cloud is emerging as “the only viable path” to GenAI, it helps to see its benefits at each stage of the GenAI lifecycle, from data ingestion through model deployment and ongoing maintenance:

- Ingestion & Preparation: Hybrid cloud excels at managing fragmented data without forcing everything into one location. For example, an insurer can keep sensitive policy data locally and customer logs in a cloud CRM. Integration methods such as data virtualization, federated queries, and global file systems let AI access data across Azure, AWS, and private data centers without painful ETL cycles. Data preprocessing, such as conversion to AI-friendly formats and metadata extraction, can happen near the source (like IoT edge filtering) before curated data is sent to the cloud, speeding up model training and RAG ingestion without large migrations. Crucially, these setups also allow sensitive data to be anonymized on-premises before it reaches the cloud. Many GenAI initiatives have failed because data couldn’t move to the cloud; hybrid addresses this by bringing AI to the data in situ.

- Inference & Deployment: Hybrid cloud lets enterprises deploy AI models precisely where they best serve business needs. Global firms may use local, distilled models at branch offices or edge devices for quick responses, reserving more complex cloud-based models for sophisticated queries. In regulated environments, inference may remain entirely on-site. Hybrid setups often feature “private GPT” arrangements where local models handle sensitive internal queries, only routing broader queries externally after removing confidential details. Google Distributed Cloud (GDC) illustrates this by offering cloud-managed, fully local AI deployments, which are ideal for compliance, latency, and resilience. These deployments also ensure business continuity by enabling local AI operations if cloud services fail.

- Monitoring, Feedback & Auditing: Hybrid architectures simplify unified model monitoring across environments, centralizing telemetry data (such as latency, accuracy, and drift). User feedback, chat histories, and sensitive usage audit logs can stay on-premises for compliance, even if inference occurred in the cloud.
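
The “private GPT” arrangement described under Inference & Deployment can be illustrated with a short routing sketch: queries flagged as internal go to a local model, and everything else is redacted before it leaves the premises. The regular expressions, the `INTERNAL_MARKERS` keyword list, and the two model callables are hypothetical stand-ins; a production system would rely on a vetted PII detection or DLP tool rather than hand-written patterns.

```python
import re
from typing import Callable

# Illustrative patterns only (assumption): not a complete PII detector.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical keywords that mark a query as internal-only.
INTERNAL_MARKERS = ("confidential", "internal only", "patient")


def redact(text: str) -> str:
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def route(
    query: str,
    local_model: Callable[[str], str],
    cloud_model: Callable[[str], str],
) -> str:
    """Send sensitive queries to the local model; redact the rest for the cloud."""
    if any(marker in query.lower() for marker in INTERNAL_MARKERS):
        # Sensitive query: it never leaves the controlled environment.
        return local_model(query)
    # Broader query: strip confidential details before calling out.
    return cloud_model(redact(query))
```

In use, `local_model` and `cloud_model` would wrap whatever on-premises and hosted inference endpoints an organization runs; here they are plain functions so the routing decision can be tested in isolation.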

Each stage of GenAI benefits from hybrid flexibility, enabling enterprises to balance security with scale and speed with compliance. A recent Dell EMC survey found 82% of IT leaders prefer on-premises or hybrid GenAI approaches, recognizing that full cloud reliance creates unnecessary headaches. Hybrid architectures let enterprises use cloud services strategically, avoiding lock-in and maintaining portability.

GenAI Success Hinges on Infrastructure

GenAI projects don’t stall because of weak models. They stall in POC purgatory because the data isn’t ready. Siloed, inconsistent, and sensitive data stops pilots from scaling.

Hybrid cloud is the path forward. It creates a fabric where data can be synchronized across environments and models can be deployed wherever they deliver the most value, all while staying compliant and in control.

To industrialize GenAI, enterprises need more than ambition. They need architecture that fits their reality. That starts with hybrid.

-

Aron Brand, CTO of CTERA Networks, has more than 22 years of experience in designing and implementing distributed software systems. Prior to joining the founding team of CTERA, Aron acted as Chief Architect of SofaWare Technologies, a Check Point company, where he led the design of security software and appliances for the service provider and enterprise markets. Previously, Aron developed software at IDF’s Elite Technology Unit 8200. He holds a BSc degree in computer science and business administration from Tel-Aviv University.
